CACHE

Direct Mapped

Non-volatile Memory

- Storage that maintain information even without power

- ROM (Read - only memory)

- Store persistent information like file

Volatile

- Loses information when electrical power is interrupt

- RAM (Random Access Memory)

- Main storage of Process Memory discussed

Dram

- Stores a single Bit

- High density

- Can pack a lot together to form many bits

- Access latency is very slow

- Energy consuming due to recharging of battery often as the memory will decay

- Delivers memory on positive and negative edge of a clock

=>We have a memory problem where the CPU would want more memory than the memory can give it.

Sram

- 6 transistors per memory cells

- Low density

- Fast Latency access (0.5 - 5 ns)

Slow Memory: Magnetic Disk

- Super Slow

- Latency (4-10ms)

- Latency (4-10ms)

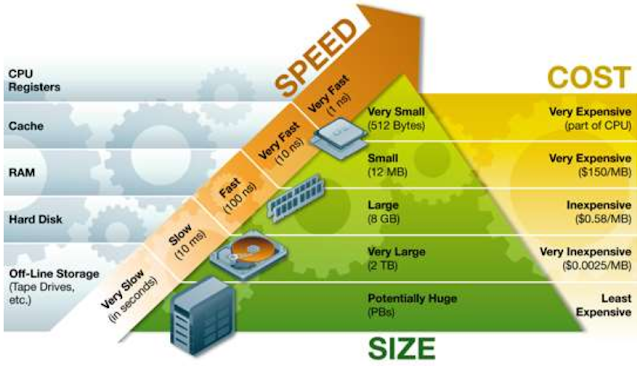

Quality vs Quantity

Components:

- Hard disk

- Hard disk

- Memory (Dram)

- Register

- Big fast memory

- 1GB

- Access 1ns time

Key concept:

Use one whole hierarchy of memory technolgy

-> Fast next to CPU

-> Larger further away from CPU

Cache

- Keep the frequent and recently use data in the SRAM

- Access the bigger one only if you can't find it in the SRAM

Principle of Locality

- Program access only a small portion of the memory address space within a small time interval

Temporal Locality

When reference, the item tend to be reference again soon

e.g variables like int i, loops

Spatial locality

When reference, will tend to reference the nearby memory

e.g Arrays access, instructions

Usage and Terminology

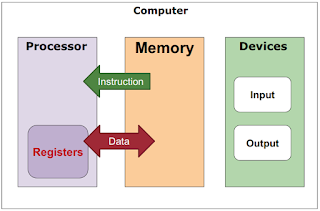

1. Processor check if data in cache

2. Cache hit: Found data in cache

- Data is return to processor from cache

- Fast

Cache Miss: Data not in cache

- Data is loaded from memory

- Large chunk is loaded for future references

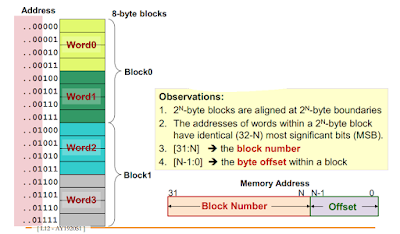

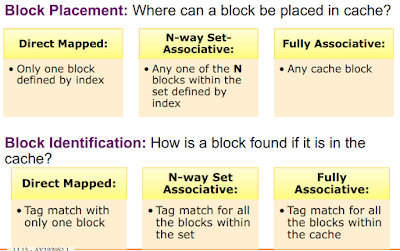

Cache Mapping

Cache Block/line

- Unit of transfer between memory and cache

Block size is typically more than 1 word (4 bytes)

16 byte block = 4 word block

32 byte = 8 word block

When we transfer, we classify each word in blocks and transfer it. If we need one byte in one word, we transfer the entire block.

To find which block the byte is in:

byte(10) divide by 8

or byte(2) right shift 3

To find where the byte is in the block:

The last 3 digits in the binary of the byte number (the memory starts from 0)

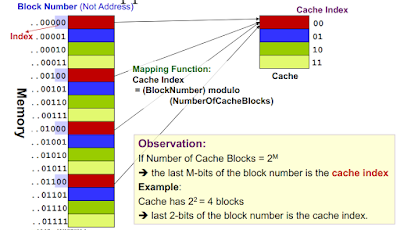

Direct Mapping

Do round robin to map to SRAM

Each time do from 0 to 3

Cache index (dec) = blockNum(dec) % (numberOfCacheBlocks)

Cache index (bin) = blockNum last (numberOfCacheBlocks) bits

Cache index (bin) = blockNum last (numberOfCacheBlocks) bits

The number of cache blocks = 2^m where m is the last m bits of the block number is the cache index

Unique identifier

We need to know which memory is inside the cache, using a unique tag number that can identify which block is which

tag = blockNumber/NumberofCacheBlocks

Cache contains:

1. Tag of memory block further identifies the memory in cache

2. Valid bit indicating whether the cache line contains loaded data

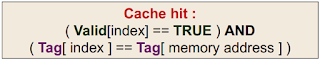

Cache hit: 6.

Cache-> 16KB

Memory -> 4GB

1 Block = 16 bytes

Memory load Instruction

LOAD

Given a

lw $t0, 0($0)

The 0($0) is Mem Access

1. Check index field

2. Go to index number and check if the location matches what we are trying to look for, Check valid

3. Check the tag ensure its the correct memory block

4. Cache miss if any of condition fails and cache hit if condition met.

4. Cache miss if any of condition fails and cache hit if condition met.

CACHE MISS

5. If its a cache miss,

- this a cold compulsory miss if it's the first time we are looking at it

- Conflict if it was there before but was replaced.

6. Load 16 byte from the memory, the last 4 bits is empty because we are loading the cache boundary

7. Load 4 words and return the word the register asking for by using the offset

e.g offset -> 0100 -> drop last two bits -> 01 -> load word 1

8. Set the valid to 1

9. Set tag

CACHE HIT

5. Return the word to register by converting the offset

e.g offset -> 1100 -> drop last two bits -> 11 -> load word 3

But if we have a cache miss and there is a value in the blocks, we will do cache eviction.

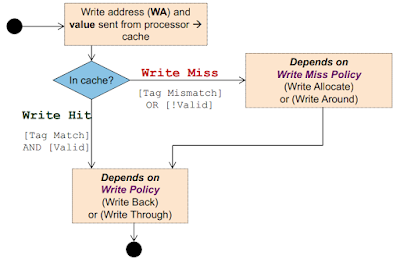

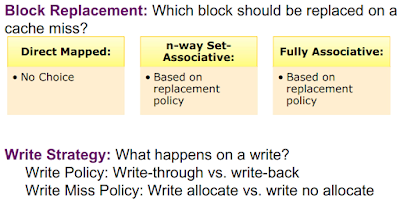

STORE (Write Policy)

Modified data in cache but not in memory.

Write through Cache

Write data both to cache and main memory (Parallel)

Problem: Operate same speed as main memory

Solution: We will use a buffer called write buffer to run together

Processor: Write data to cache and main memory

Memory controller: Write contents of buffers to memory

Write back Cache

Write to cache only

Only write to memory when evicted

Only write to memory when evicted

Problem: There will be a large memory overhead if all blocks evicted is replacement

Solution:

Solution:

Use Dirty Bit to check if its being changed (extra bit), only write back to memory when dirty bit is 1

Handling Cache Misses (Store into address)

It is not inside the cache but we want to write it. Loading it again might evict previous and it will take some time to load it.

We do not need to see the previous value.

Write allocate

Load the complete block to cache

Change only the require word in cache

Follow write policy

Write around

Do not load the block to cache

Write directly to main memory only

Set Associative and Fully Associative

Cache Performance

Hit: Data is in cache

Miss: Data is not in cache

Quantifying it:

Hit rate: Fraction of mem address that hit

Hit time: Time to access Cache

Miss rate: 1- hit rate

Miss penalty: Time to replace cache block + deliver data (stop when we go back to processpr)

Average Access time = hit rate * hit time + (1-hitRate) * miss penalty

This will get the average performance of our system.

E.g

Suppose:

SRAM =0.8ns access time (Cache)

DRAM = 10ns access time (mem)

How high a hit rate we need to sustain avg access time of 1ns?

1ns = 0.8*H + (1-H) * 10

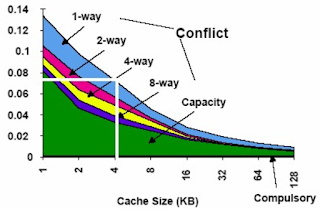

Type Of Misses

Compulsory/Cold Miss

Access the memory the first time.

Very hard to avoidable.

Solution 1: Make the block size bigger so we have a largest range.

- Reduce miss rate

- Takes longer time to bring it to the cache (miss penalty will increase)

- Too few cache block space thus miss rate will go up eventually due to conflict miss.

Conclusion: Its useful for earlier but eventually miss rate will be the same.

Conflict miss

Wanted memory was previously in cache but was replaced by another memory.

Beware because it might not be a conflict miss if I access the memory even if it is the same location. [Lecture video - 8/10]

Beware because it might not be a conflict miss if I access the memory even if it is the same location. [Lecture video - 8/10]

Solution 1: N way Set associative

Give more slots per set that starts with a certain

Memory block can be place in a fixed number of location (N>1) in cache

- Multiple duplication

- Memory block maps to an unique sets

- Search: search all n location within the set

- For same cache size, conflict miss goes down with increasing associativity

e.g 2 way set associative cache

there are 2 cache blocks in one set. Any mem block can be map into either of the cache blocks

Each block contains

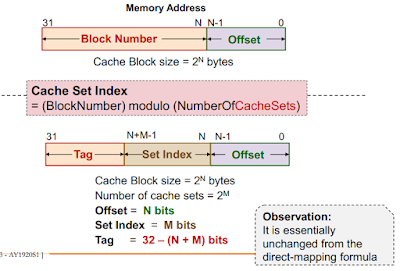

- block number

- offsets

- set index (The set index is used to determine which duplicate to place the memory in)

- block number

- offsets

- set index (The set index is used to determine which duplicate to place the memory in)

There are now unique number of sets.

Given the memory

N = offset in bits

M = set index

N = offset in bits

M = set index

1. Check the number of blocks = 2^ block number, block number = 32 - N

2. No. of cache blocks = No. of bytes in cache/ No. bytes per block

e.g Cal number of cache blocks = cache / 4

e.g Cal number of cache blocks = cache / 4

3. No. of Sets = No. of cache blocks/No. of ways

e.g No. of cache block/4

4. Get set index by putting the No. of sets to 2^M

where M is set index.

where M is set index.

5. Cache tag = 32 - M - N

Advantages:

Rule Of Thumb:

A direct mapped cache of size N has about the same miss rate as a 2 way set associative cache n/2

(On avg)

Memory Load Instruction - Set associative

1. Look at valid and tag valid

2. Bring block into cache and favor the first block

3. Set the tag and valid

4. Load from M[n] and M[n+4]

5. Depending on offset, if the msb is

1 - load to w1

5. Depending on offset, if the msb is

1 - load to w1

6. Can load the content to processor

Solution 2: Fully Associative cache

The memory block can go to any location in the cache as long as there's space

- Searching is difficult (Search for everyblock)

- Conflict miss is 0

Since there's no indication, the tag is the entire block number.

Capacity Miss (only for FA)

The cache is smaller than the memory so we will run out of space eventually.

cache space < memory space

- For same cache size, Capacity miss remains the same irrespective of associativity

- Capacity miss decreases with increasing cache size.

Total miss = cold miss + conflict miss + capacity Miss

CAP Miss (FA) = total miss (FA) - Cold Miss(FA)

Block Replacement Policy

In SA and FA, we need to place a mem block.

- Which block to kick

LRU (Least recently used)

- Check the one who is not been used recently

- Record the block that was accessed

- kick the one who was LRU

- Promote each time we use the value

Issues:

- Hardware problem

- Hard to keep track if there are many block

FIFO

- Kill the one who went in first

- Second chance variant

- Put it back if its used

- Put it back if its used

- If two block is used, kill the older

RR(Random replacement)

- Randomised

LFU(Least frequently used)

C = cache capacity

b = block size

B = number of blocks

N = degree of associativity

S = number of set

tag_bits

set_bits (also called index)

byte_offset

v = valid bitsB = C/b

S = B/N

b = 2^(byte_offset)

S = 2^(set_bits)|___tag________|____set___|___byte offset_|