Priority Queue

Its automatically sorted and sorted based on the comparator provided. Technically, a heap is a priority queue..

Problem:

Each patient is assigned a priority score and the doctors will look at the patients that needed help first.

What operations do we need to support?

Operations:

insert(x) : inserts x

max() : returns element with the highest priority

extractMax() : returns and remove the highest priority element

size() : returns the size of the queue

buildHeap(A) : creates a priority queue from an array of patients

insert(x) : inserts x

max() : returns element with the highest priority

extractMax() : returns and remove the highest priority element

size() : returns the size of the queue

buildHeap(A) : creates a priority queue from an array of patients

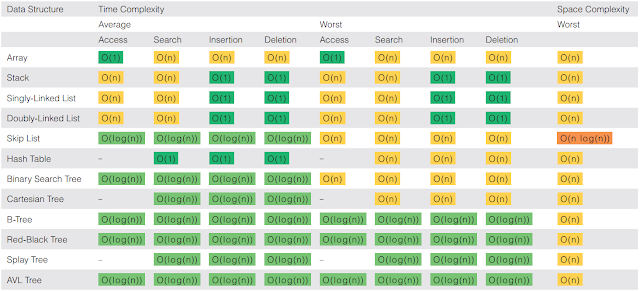

Whats the worst case time complexity of insert followed by extractmax if we use a singly linked

list

n+ n = 2n = o(n)

->We have to scan through the to find the max (or next maximum) or insert have to maintain sorted order

->We have to scan through the to find the max (or next maximum) or insert have to maintain sorted order

What is the worst case time complexity of insert followed by extractMax if we use an array?

O(logn)

->Because the cher scammed us, we are using a heap

Both insert and extractMax uses logn because every time we added/take we need to check heap rule violation

Both insert and extractMax uses logn because every time we added/take we need to check heap rule violation

Exploiting Structure

Maintaining order in a data structure is not free, We still have to pay for time/ memory

Unsorted Array:

Insert -> O(1)

Max - > O(n)

Search -> O(n)

Sorted Array:

Insert -> O(n)

Max - > O(1)

Search -> O(logn) //binary search

Binary Heap:

Insert -> O(logn)

Max - > O(1)

Search -> O(n)

Binary Tree

A binary tree is not a binary search tree. It can be sorted or unsorted as long as it is following this structure:

A binary tree can have at most 2 children.

Values can be repeated.

Height of Node: Number of edges on the longest path from node to a leaf (from its children)

Depth of Node: Number of edges to the root. (from the root)

Edges: Each arrow

The height of the tree is equal to the dept of its deepest node.

Depth of Node: Number of edges to the root. (from the root)

Edges: Each arrow

The height of the tree is equal to the dept of its deepest node.

The height of a node is the number of edges on the longest path from node to a left

We do not count the first node as part of the height

Complete Binary Tree

The tree is perfectly balance, except for bottom level.

All levels are completely filled except possibly last level where the keys are filled from left to right.

The height h of a complete binary tree is {logn} <- floor

where n is the number of values in the tree.

Binary Heap

A binary heap should have each parent be larger/smaller or equal than the child depending if its Max/Min heap respectively.

Tree Representation

Class Node

Object key

Object data

Node leftchild

Node rightchild

Node parent

Object key

Object data

Node leftchild

Node rightchild

Node parent

Array Representation

Indices start at 1

Takes nodes in level order

There's no need to link

Every parent with index n will have its child being n*2 (left) or n*2+1 (right)

Sink and Swim

Swim (Shiftup, Bubbleup, IncreaseKey)

To go up the heap

Problem: If the key becomes larger than the parent

Solution:

- Swap Child with parent

- Repeat until heap order is restored

PseudoCode for array implementation

function swim(A, k)

while (k > 1) and A[k/2].key < A[k].key

swap(A[k], A[k/2])

k = k/2

while (k > 1) and A[k/2].key < A[k].key

swap(A[k], A[k/2])

k = k/2

Sink (ShiftDown, BubbleDown, Heapify)

To go down the heap

Problem: If key is smaller than the one or both of its child

Solution:

- Swap with the larger child

- Repeat until the order is restored

Pseudocode

function sink(A, k)

while (2k <= n) //number of items in subheap

j = 2k

if (j<n and (A[j].key < A[j+1].key)) j++

if not (A[k].key < A[j].key), break

swap(A[k], A[j])

k = j //change index

while (2k <= n) //number of items in subheap

j = 2k

if (j<n and (A[j].key < A[j+1].key)) j++

if not (A[k].key < A[j].key), break

swap(A[k], A[j])

k = j //change index

Extracting the largest value

When extracting the max/min for a max/min heap sort respectively, we must

1. Swap the last index with the first (The first is assumed the largest)

2. Remove the last index (pop)

3. Sort

Sorting a binary heap takes logn time

Insertion of a binary heap cost logn

-> Add node o(1), swim/sink o(logn)

-> Swim/sink is logn because its traversing not the whole tree

-> Swim/sink is logn because its traversing not the whole tree

ExtractMax takes up to O(logn)

-> We need to sort (swim/sink) again

Building a binary heap cost n time

To put in -> n //theres n items

To sort -> N //still need to traverse

n+n => O(n)

Robert Floyd's creation:

- View the input array as binary tree

- Bottom up fixing of the tree to satisfy maxheap property

- Make use of recursion to go from bottom to up, viewing each 2 sub heap as wanting to merge together.

- Make use of recursion to go from bottom to up, viewing each 2 sub heap as wanting to merge together.

If we want to merge a root to two heaps, we combined them and we sink

Its N time because the sorting of the binary heap still has to iterate every element and checking its parents by taking childindex/2.

The iteration goes from the back of the binary heap to the front.

PseudoCode:

function buildHeap(A)

for i from A.length/2 to 1 //i is the counter for index

sink(i)

//its counting down

we realised that the divide by 2 ensures that we will only look at the elements that are not the last element.

The iteration goes from the back of the binary heap to the front.

PseudoCode:

function buildHeap(A)

for i from A.length/2 to 1 //i is the counter for index

sink(i)

//its counting down

we realised that the divide by 2 ensures that we will only look at the elements that are not the last element.

There will never be any violation at the last height of the heap (one element heap) so we don't need to iterate the , but as the height increase, there is a more amount of work done because we need to check each child at the bottom if the rule is violated.

At level h, there are 2^(H-h) nodes so work down per level is ch2^(H-h), where H is total height

HeapSort

The computational complexity of sorting using a binary heap is O(nlogn), space is O(n)

function heapsort(A)

n = length(A)

sorted = new Array[n]

binheap = buildHeap(A)

for i = n-1 to 0 //iterate n times

a = binheap.extractMax() //log n times

sorted[i] = a

But we can change the space to O(1)

n = length(A)

sorted = new Array[n]

binheap = buildHeap(A)

for i = n-1 to 0 //iterate n times

a = binheap.extractMax() //log n times

sorted[i] = a

But we can change the space to O(1)

- Take the largest element, swap with last index

- Move pointer down

- Move pointer down

- Ensure heap not violated without including sorted section

- Repeat 2

- Stop until reach first index

- Stop until reach first index

function heapsort(A)

n = A.length

n = A.length

// create the binary heap

for i = n/2 to 1 //takes n time

sink(A, 1, --n);

for i = n/2 to 1 //takes n time

| sink(A, k, n)// swap and sink while (n > 1) //takes logn swap(A, 1, n); |

Space complexity is O(1), Swap and sink takes O(nlogn)

-> Creation is O(n)

-> Swap and Sink O(nlogn)

-> Creation is O(n)

-> Swap and Sink O(nlogn)

HeapSort Compared to Quicksort

Heapsort is optimal for time and space but

- Inner loop is longer than quicksort

- Bad Caching (Children are far from parent)

- Not stable

Quicksort is still faster in practice because it does not do unnecessary swaps

MinHeap

The top is the minimum item.

- Negate all keys

- Change comparator

- Instead of sink we swim (Possible)IntroSort (Additional Info)

- Run quicksort

- Cutoff to heapsort if stack depth exceeds 2logn

- Cutoff to insertion sort for n=16

This is nlogn

Summary:

<Prev Next>